Most guides on detecting AI images are from 2022 and have aged like dinosaur milk.

“AI can’t draw hands.”

“AI can’t draw straight lines.”

“AI can’t spell words.”

This is fantastic advice…assuming you still live in the year 2022. However, studies show that 46% of people have upgraded to a later, worse year called “2024”, and if you are one of them (condolences), this no longer applies. You cannot rely on AI images having such egregious errors. So what can you rely on?

There are still some “tells” of AI imagery—many are strangely getting worse as the technology advances—but often they’re not errors so much as they’re “conceptual tension.” Basically, an image generated by (for example) Midjourney has several different priorities:

1) fulfill the user’s prompt

2) look coherent

3) look attractive

4) satisfy a moderation policy

5, 6, 7 etc) do a bunch of crap the user isn’t even aware of.

This is a lot of plates to juggle, and if the goals clash (i.e., the user prompts for something ugly or incoherent), the image can get subtly pulled in different directions. I’ll show examples of what this looks like soon, but this sense of confusion, and not extra fingers, is arguably the best way to spot AI images now. It’s not a smoking gun. It can be subtle, and requires a careful eye. But sadly, this is an age of photorealistic fake media, and we must learn to be careful.

Keep an eye on what your older relatives are doing on social media, by the way. They might not be safe.

PSA: Delete Facebook

Facebook is the internet’s fecal tract. A viscerally unpleasant place, but you can learn a lot about what’s making the online world sick from studying it. And right now, it’s inundated by AI image-spam.

As we speak, millions of retirees are blissfully thumbing their way down a black sewer of nonexistent houses, fake people, and assorted nightmares.

These pictures are bizarre. Many don’t even try to look convincing. Some appear to be the work of aliens with only the faintest understanding of our world.

What’s “peach cream”? Who makes their own birthday cake? Why does history’s second oldest woman have prison tattoos? Why does the cake say “386”? Why is there a gun in my mouth?

(Without getting distracted by this, it’s probable that these accounts are run by the same few bad actors. The repetitive phrasing and subject matter is telling—I’ve seen dozens of cakes made with “peach cream and filling”—which makes me wonder about their sky-high engagement numbers. If you have the technical know-how to manipulate dozens or hundreds of fake accounts, you might also be viewbotting.)

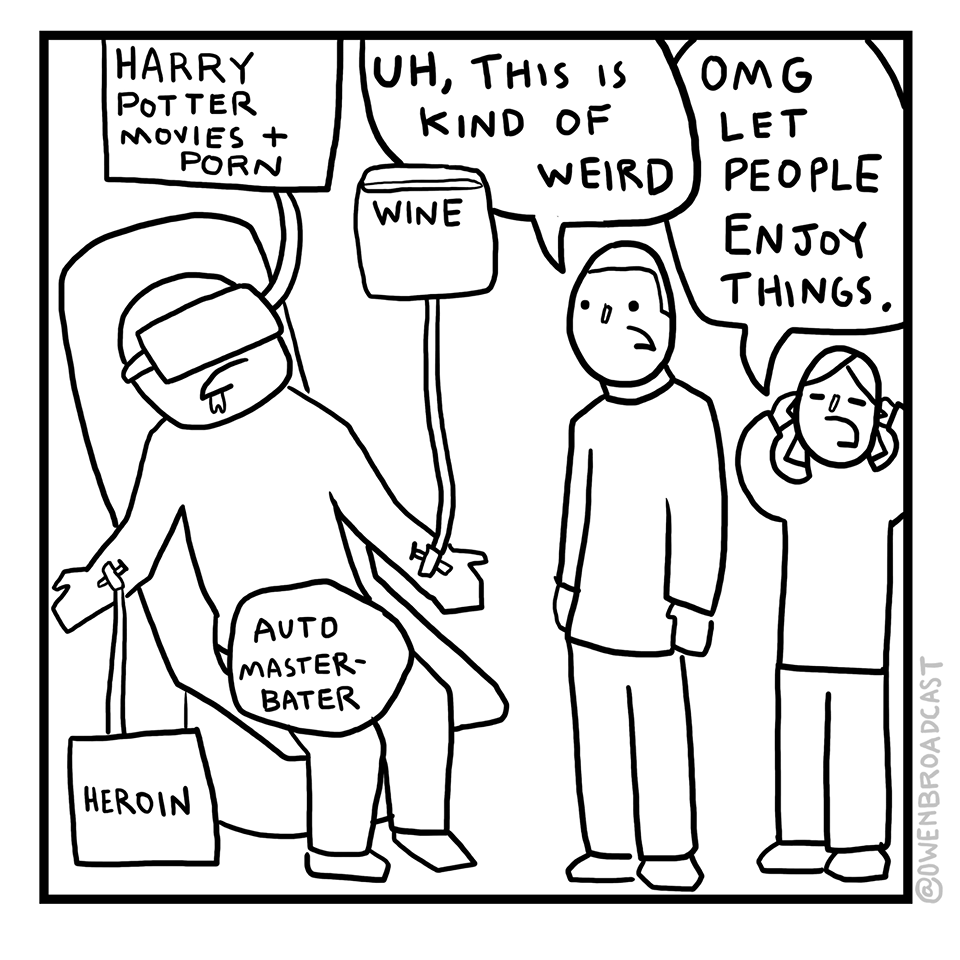

Should we care about this? Even if you have a “let people enjoy things!” outlook on life…

…It’s never a good feeling to see an older relative losing touch with reality. And suppose this is all the front for a scam? I’m not sure this alien race of 386-year-old cake-baking prison-tattooed grandmas has our best interests at heart.

But when you tell a boomer that an image is fake, they might ask “How do you know?” And that’s a fair question: how do you know? Vibes? Because that’s the world we live in? Some AI images are incredibly realistic. It’s easy to get stuck in a Twilight Zone of “I know this is fake but I can’t explain why.”

This is an AI generated image. Can you find any mistakes?[1]

In an age of photorealistic imagery, here are what I see as the signs of AI fakery.

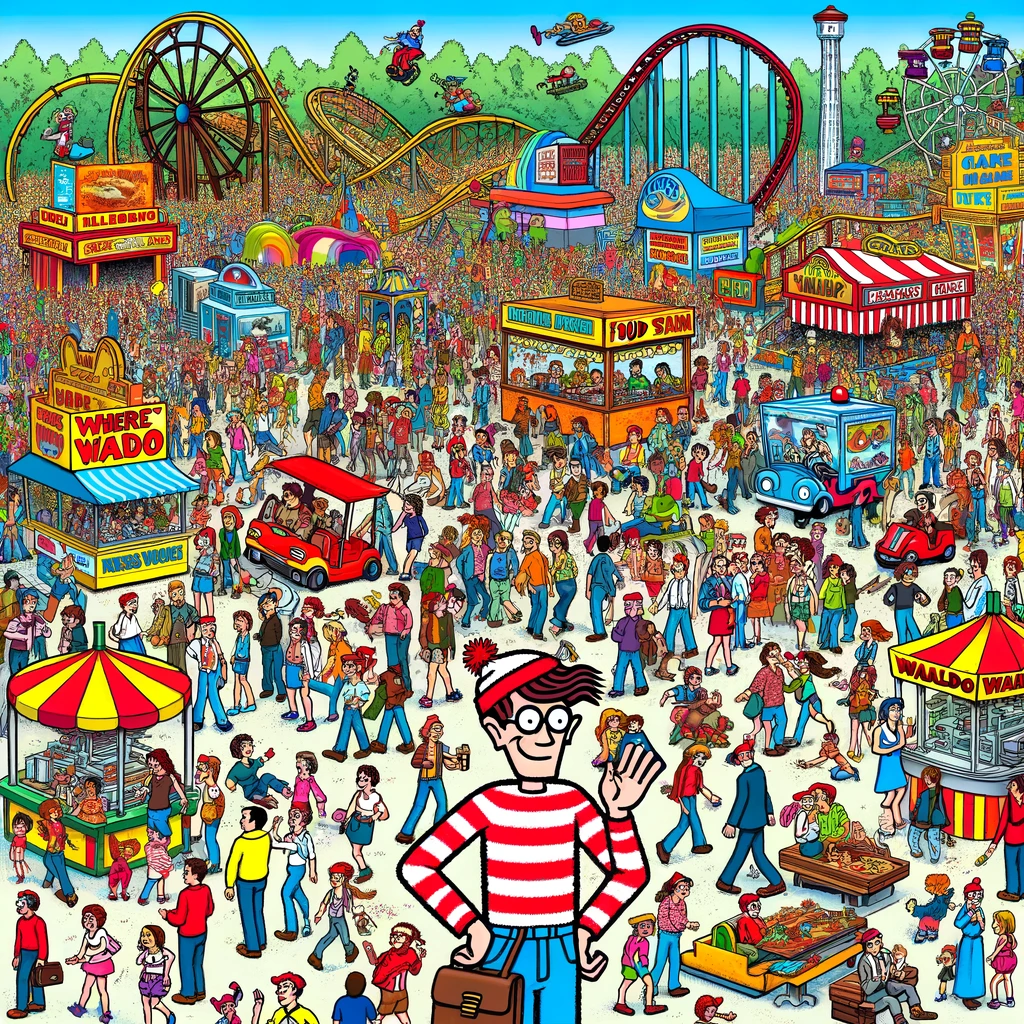

AI Images Are Excessively Archetypal

When you prompt AI for a cow, it’s never content to just give you a “cow”. It gives you the cowiest cow that ever cowed. The God-emperor of cows. A divine bovine. A cow with every “cow” trait cranked to the max on its stat sheet. A cow-icature.

You’ll always get a Holstein or a Jersey, because those are the most famous cow breeds. It will be standing in a green field on a farm, because that’s where cows live. There will be other cow-related details thrown in (like a red barn in the background, or a bell around its neck). Everything will be cow cow cow.

The image above is by Dall-E3. Midjourney V6 is more realistic[2] but has similar issues.

Contrast these perfect AI-generated cows with an actual photograph.

A real cow does not exist as a perfect Platonic archetype. It has a certain amount of not-cowness mixed in: flies, a crooked horn, a limp, asymmetry, visible scars, or whatever. AI seldom (if ever) depicts these details, because they constitute deviance from the prompt. The user did not ask for a cow with a crooked horn. They asked for a cow.

Synthetic images have little of what Roland Barthes called “The Reality Effect”—the thousands of small imperfections that make an object seem “real”. AI apparently needs to see a lot of something in its pretraining data before spontaneously including it in an image (note how infrequently Midjourney remembers to put a tag in the cow’s ear, even though nearly every real-life cow has one). When you see an image that’s just a perfect depiction of something—and nothing but that thing—get suspicious. Real life is never that perfect.

AI image models struggle with subtlety. They can mimic almost any art style, but it’s usually a loud, witless parody of that style. The concept that a picture might have depths—that its theme might require thought and interpretation, instead of being blared at the cheap seats—is foreign to them.

I find a lot of AI art really tiresome, to be honest. It’s the visual equivalent of being shouted at through a bullhorn. Human-created artwork is often subtle and seductive: the longer you stare at “Too Late” by Windus, the deeper you fall into its world and story. AI artwork often just feels like disposable junk: a loud surface with nothing substantial underneath.

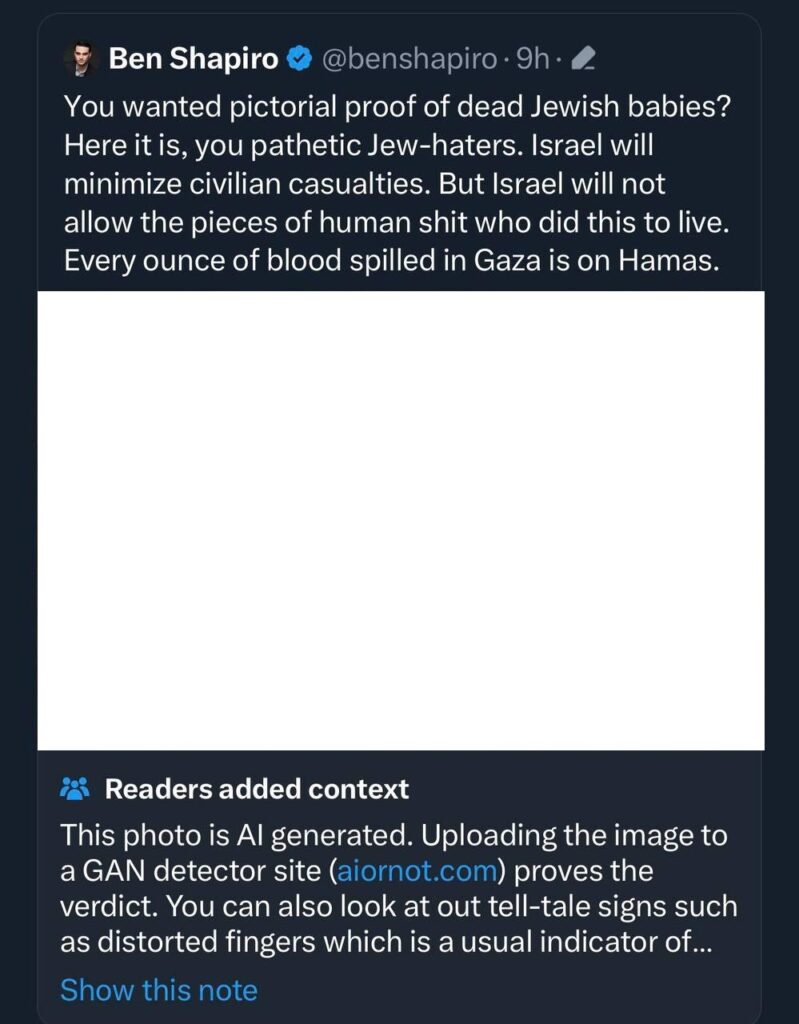

Going in a darker direction, on 12 Oct 2023, the office of Israeli Prime Minister Benjamin Netanyahu shared a photo of what’s believed to be a burned baby.

(Content warning: burned baby.)

This is a horrifying image, and certain ideologically motivated individuals claimed it was an AI fake. They even got a Ben Shapiro retweet tagged with a community note, stating that this had been “proven”.

I took one glance at that image and thought “real”.

The reality effect is like a slap in the face. You see those sad little smudges of ash on the rails? Those random dust bunnies (or whatever) against the skirting board? AI never puts things like that in an image. When AI makes something dirty, it does so in a stylized, aesthetic way. The dirt will look like it was brushed into place by a professional set designer. Those random smudges in Netanyahu’s photo are too anticlimactic and “incidental” to be AI.

Also, if you could prompt an AI image generator for a “burned baby”, you would get something that unmistakably looks like a baby. Not a hunk of charcoal that barely looks like anything.

Other people cropped a few pixels from the image, uploaded it to AIorNot, and got a verdict of authentic, thus “proving” not a damned thing, because AI detectors are trash. They do not work.

I am not saying Netanyahu’s photo is definitely real. It could have been faked through some other method (not that there’s any reason to believe it was). But anyone calling this image “AI generated” is either lying, or being a liar’s useful idiot.

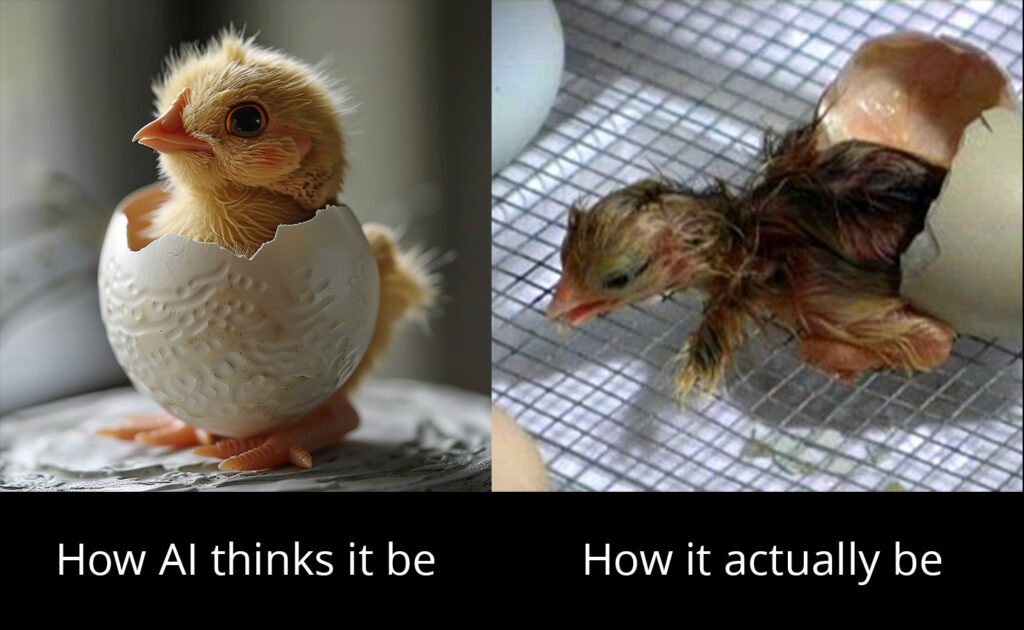

Nothing is Ugly. Everything is Beautiful.

In moments of doubt, ask: “Is there anything about this image that should be ugly, but isn’t?”

Every real photo has some ugliness. Zoom in on the nose of the real cow above, and it’s packed with dried snot.

Compare with Midjourney’s bovines, who thoughtfully blew their noses before being photographed.

I have mentioned the lack of flies. Also, the grass isn’t littered with piles of shit. Anyone who’s seen a cow knows how unrealistic this is.

AI models have a strong aversion to gross or nasty things. They struggle to capture the mundane grubbiness of real life, except in a stylized way. Prompt for an ugly person, and you either get a fantasy goblin… (Midjourney V6)[3]

…a “gritty” character actor in a Coen Brothers film that women would probably thirst over if he was real… (SDXL)

…or a refusal. (Dall-E3)

Genuine, untrammeled ugliness is so rare that it sometimes goes viral. I remember when Loab became a thing. Someone claimed that by prompting SD a certain way you could uncover a paranormal cryptid in latent space.

As was noted by feminists, in most pictures of “Loab” there’s nothing supernatural about her. She’s not a demon or Lovecraftian monster. She’s simply an unattractive, unsmiling woman with a skin condition! People like Loab are everywhere in real life—I see uglier faces every time I go to a hardware store—but they’re strikingly rare in AI-generated images. So rare that they can literally be passed off as paranormal monsters.

Look For “Concept Merge”

This is awkward to explain, but you’ve seen it before.

Basically, it describes the AI tendency to get stuck between two contradictory ideas. Rather than choosing one or the other, it mashes them together. Here’s an image I swiped off Facebook. (I’m not crediting any of these losers.)

Looks good, but what are those objects on the wall? Brightening the image…

Are they frying pans? No, they have shield bosses. But if they’re shields, why do they have frying-pan handles?

This is concept merge. The prompt was clearly something like “a rustic cabin with a Viking boat in it”, but when it came time to add objects to the wall, Midjourney had a choice between “frying pans” (appropriate for a rustic cabin, inappropriate for a Viking boat), or “shields” (appropriate for a Viking boat, inappropriate for a rustic cabin). It got stuck, couldn’t make a choice, and eventually created weird frying pan/shield combinations.

Concept merge is a frequent feature of AI images, like the one below. It was shared on Twitter by someone who was convinced it was perfect, with no AI mistakes.

Obviously it has many: the girl has three nostrils, weird small gerbil-teeth, hair made of transparent plastic (note the left eyebrow visible under her bangs), deformed egg-yolk irises, and hair strands that combine, multiply, and flow against physics in dozens of places. She has the generic “sameface anime girl” look endemic to a thousand LoRAs such as Wowifier. The image makes incongruent stylistic choices: her exaggerated facial design (huge eyes + tiny nose + pointy chin) looks odd next to the realistic-looking skin and fabric.

But most striking are her clothes. What is she meant to be wearing?

- A studded leather jacket?

- A crop top?

- A schoolgirl sailor fuku?

StableDiffusion couldn’t make up its mind…so it gave her all three at once!

Human artists do not make mistakes like this. Even the shittiest artist does not draw two totally different things at the same time. AI images split the difference and compromise. Faced with two choices, they dive right down the middle.

Concept merge is most obvious in small details. Look for the least important thing in the image that’s still a distinct object. Often, it will be a couple of different objects smushed together.

Bricks. Tiles. Planks.

This will be fixed some day, but right now, AI cannot do tessellated patterns. Even the task of depicting a chessboard or a brick wall defeats every model.

It’s a classic case of attacker’s advantage. Tessellated patterns are defined by their uniformity, and every brick the AI renders must be perfect. A 95% success rate isn’t good enough: one malformed brick gives away the illusion. The AI has many chances to fail and only one chance to succeed.
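To put rough numbers on the brick problem, here is a minimal sketch. It assumes (purely for illustration) that each brick is rendered correctly with the same probability and that bricks fail independently of one another. Real models almost certainly don't behave this neatly, and the function name is my own invention.

```python
def flawless_wall_probability(per_brick_success: float, brick_count: int) -> float:
    """Chance that every brick comes out right, assuming independent bricks."""
    if not 0.0 <= per_brick_success <= 1.0:
        raise ValueError("per_brick_success must be between 0 and 1")
    if brick_count < 0:
        raise ValueError("brick_count cannot be negative")
    # Every brick must succeed, so the per-brick probabilities multiply.
    return per_brick_success ** brick_count


# The 95% figure comes from the paragraph above; the wall sizes are made up.
for bricks in (1, 10, 50, 200):
    chance = flawless_wall_probability(0.95, bricks)
    print(f"{bricks:>3} bricks: {chance:.2%} chance of a flawless wall")
```

Under those assumptions, a 50-brick wall comes out flawless less than 8% of the time, which is why walls and fences are worth a second look.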

So whenever you’re on the fence about an image, look for a fence in the image. Or a brick wall. Or roof tiles.

“Honey, can you go to Home Depot and pick up a single brick that’s about 4 feet long and bendy in the middle? You know the kind.”

“We’ll also need a perfectly square wooden plank for the floor.”

(This is more “concept merge”, by the way. The prompt was probably “a kitchen in a rustic cabin”. Rustic cabins have wood floors, but kitchens are usually tiled, so the planks assume a weird tile-like character.)

What other things can you look for?

Buttons. Is a row of buttons on a jacket spaced uniformly? Or are some further apart than others? In the grandma cake image, note that the top two buttons on her cardigan are spaced closer together than the bottom two. Likewise, the knobs on the thing in the background are unevenly spaced.
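If you'd rather measure than squint, here is a rough sketch of the same check. It assumes you've read off the pixel position of each button (or knob, or fence post) by hand in an image editor. The coordinates below are hypothetical, and `gap_variation` is a name I made up, not part of any tool.

```python
from statistics import mean, pstdev


def gap_variation(positions: list[float]) -> float:
    """Coefficient of variation of the gaps between neighbors (0 means perfectly even)."""
    if len(positions) < 3:
        raise ValueError("need at least three positions to compare gaps")
    ordered = sorted(positions)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average_gap = mean(gaps)
    if average_gap == 0:
        raise ValueError("all positions are identical")
    # Spread of the gaps relative to their average size.
    return pstdev(gaps) / average_gap


# Hypothetical pixel heights of four buttons, measured by hand.
even_buttons = [100, 160, 220, 280]
uneven_buttons = [100, 140, 220, 280]

print(f"even:   {gap_variation(even_buttons):.2f}")
print(f"uneven: {gap_variation(uneven_buttons):.2f}")
```

Treat the number as a hint, nothing more. Perspective, folds in fabric, and a missing button will all make real clothing look uneven in pixel terms, so a high value only tells you where to zoom in.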

Electrical cables and ropes and strings—particularly if there are multiple overlapping ones. Are they sensible? Can you trace them from place to place with your finger, or do they become scribbled tangled-up garbage?

Tree branches are a big one. They’re so complex that they often look like random scribbled nonsense, and AI depicts them as such. Zoom in and you’ll see something’s not right.

Some beautiful concept merge here too. Is the cabin made of logs or stones?

(The comments on that picture were depressing. “Ha! You’re gonna feel like an idiot when the river floods and your cabin gets washed out!” If Mount Stupid was real, Facebook’s users would be Tenzing fucking Norgay.)

Also, zoom in on chain links.

Again, this might be fixed next year, or tomorrow for all I know. But for now, AI struggles with thin, complicated objects that exhibit a coherent structure.

The wonderful thing about the real world is that physical laws apply everywhere, equally, to all objects. A speck of dust is no less subject to the law than the titanic mass of Jupiter. As is written in the gospels, the regard of God is upon even the smallest sparrow.

AI does not care for sparrows. It has a limited bucket of competence, and lavishes it upon the central, important subject of the image. The small things get forgotten about, so zoom in on them.

Learn Basic Media Literacy

I hope I do not sound condescending or sarcastic. But whenever something happens in the world of synthetic media—whether it’s the GAN deepfakes of 2017, or Sora in 2024—we see the same histrionic reactions, over and over.

“Facts are over. Truth is over. We can’t trust photos anymore!”

Why were you trusting photos before? Does Photoshop not exist? Can’t a 100% real picture still be used to trick and deceive? How does a naïf like you have any money left in his bank account?

As Randall Munroe once observed, every anxiety about deepfakes could be applied to words. “You mean people can just…lie to you? Say that the sky is green when it’s blue? Say that the moon’s made of cheese? Oh my God, nobody can trust anything! We’re in a post-truth era!”

Yes, deception is a problem. But it is not insurmountable. It hasn’t spelled the end of truth as we know it. We can defeat liars, whether they use words or photos or (soon) videos. We need to take the natural skepticism we have toward words (“talk is cheap!” / “money talks, bullshit walks!”) and allow it to flow over to images as well. This is an easy thing to do. So easy that there’s no excuse not to be doing it already.

When you see a photo of something alarming, ask questions like:

- Where did this image come from? What is its source?

- If it’s a photograph, who took it?

- Has the person sharing it built up any social capital that would inspire me to trust them?

- How easy would this image be to fake? Would anyone benefit from doing so? (if unsure, default to “very easy” and “yes”)

- People never take just one photo of a really cool thing. Can I find multiple photos, from different angles? Is it plausible that the Pope would go out in public wearing a puffer jacket and exactly four photos of it would exist?

- Is this photo even physically possible to take?

Someone shared this photo.

The pelican’s body looks AI generated (“fur or feathers” concept merge). I don’t know about the head. It looks far more detailed than any I can get out of Midjourney—did someone crop out a pelican’s head from a photograph, and paste it in using Photoshop? It doesn’t seem to connect to the body.

But there’s zero chance that it’s real. It’s implausible that a drone would snap such a high-quality photo, perfectly framed and lit. We’ve seen pictures of birds flying into drones. They look like this.

This is another case of AI getting too good. It creates a photorealistic image even when we wouldn’t expect one.

Postscript: How To Recognize AI Generated Text

Models Have a “Voice”

We all know “ChatGPT tone” by now. People mistakenly call it “bland” and “generic”. It’s actually the most distinctive writing style on earth. I can recognize ChatGPT-created text within the first 1-2 sentences.

“Dive into the fascinating world of “skub”, a mesmerizing concept that echoes through time like a haunting symphony, unraveling a captivating portrait of the human imagination. “Skub” is more than just a word. It’s a philosophy. A lifestyle. A poignant reminder that divisive concepts can also unite us. As we delve further into “skub”, it is important to be mindful of diverse perspectives, and to recognize that “skub” may be controversial among certain groups. Together, “pro-skub” and “anti-skub” activists weave a synergistic tapestry, an intimate dance that highlights the positives of “skub” while remaining mindful of its potential impacts. So, in conclusion, whether you wear a “pro-skub” or “anti-skub” T-shirt, let us stand together in solidarity and mutual respect, allowing our diverse viewpoints to forge a better, more inclusive tomorrow.”

(I didn’t generate that, I typed it from memory.)

Nostalgebraist once described this as an HR managerial fantasy of how humans talk. Peppy and upbeat, with a hint of supercilious smugness. Supremely confident, while being frantically neurotic that it could offend or confuse someone. It over-qualifies and undermines itself with weasel phrases and ambiguity. ChatGPT is so terrified of saying something wrong that it doesn’t care whether it says anything right.

ChatGPT has the most exaggerated writing style of any chatbot, but they all have distinctive voices. Gemini loves contrastive “it’s more than just [thing x], it’s [thing y]” sentences. When you ask Grok to diss something, it always says “you’re like a walking talking [x]”.

Many other models are trained on synthetic data from ChatGPT (whether they know it or not), and echo ChatGPT’s pablum.

Lots of Filler Adjectives

AI models are addicted to pretty but empty adjectives. Everything is captivating or enchanting or inspiring.

These are filler adjectives. They are vague, nearly meaningless, and add virtually nothing to the text. Any writing coach would tell you to cut them.

Take “captivating”. Its definition is “capable of attracting and holding interest”…but that could be anything. Sunsets are captivating. Car wrecks are captivating. Crossword puzzles are captivating. If an adjective could equally be applied to love’s first kiss and to a weird bug smashed on a car windshield, then it’s probably better to drill down to the metal and explain exactly what makes the thing captivating.

AI-generated text abhors such specificity. It casts a broad net over the reader’s mind, encouraging them to add their own meaning. You might have your own idea of what “haunting” means, so when you read “he played a haunting melody on the violin” this can easily engage your senses in a way that seems profound. It seems like the AI is reading your mind! In reality, it’s something of a parlor trick. There were no magic slippers, and the profundity was supplied by you.
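For the curious, here is a crude way to put a number on the adjective habit. It's a sketch under loud assumptions: the word list is a hypothetical starter set pulled from the examples in this article, `filler_rate` is a name I invented, and a high score proves nothing on its own (plenty of human marketing copy would ace this test).

```python
import re

# Hypothetical starter list based on the adjectives mocked in this article.
FILLER_ADJECTIVES = {
    "captivating", "enchanting", "inspiring", "mesmerizing",
    "haunting", "fascinating", "poignant",
}


def filler_rate(text: str) -> float:
    """Filler adjectives per 100 words; returns 0.0 for text with no words."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in FILLER_ADJECTIVES)
    return 100.0 * hits / len(words)


sample = "He played a haunting melody in a captivating, enchanting garden."
print(f"{filler_rate(sample):.1f} filler adjectives per 100 words")
```

Three hits in ten words is absurdly high, which is the point: run it on a paragraph you wrote yourself and the number will probably sit near zero.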

Sarah Constantin wrote about a thing called “Ra”.

Ra […] can roughly be summarized as the drive to idealize vagueness and despise clarity. […] You know how universal gods are praised with formulas that call them glorious, mighty, exalted, holy, righteous, and other suchlike adjectives, all of which are perfectly generic and involve no specific characteristics except wonderfulness? That’s what Ra is all about. […] Ra is evident in marketing that is smooth, featureless, full of unspecified potential goodness, “all things to all people,” like Obama’s 2008 campaign.

“All things to all people” is a good way of putting ChatGPT’s writing style. It appears to fluently speak dozens of languages. In truth, it speaks only one: Ra.

AI Text Is Tonally Inappropriate

If someone is using AI to write their social media posts, this is usually obvious, because the text will not match the tone expected of the conversation.

When a human enters a social space, they subconsciously “read the room”, and adjust their voice (or writing style) to fit. If everyone is using slangy contractions, they will too. If everyone’s writing in a stiff, formal way, they will too. Linguists call this a “code switch”. AI usually has no idea where its text will end up. Thus, it cannot read the room, cannot code switch, and its output will be very “general”, disconnected from the discussion going on around it.

This is easy to recognize in practice. @fchollet tweeted this:

“Accelerating progress” should mean increasing the breadth and depth of our search for meaning & solutions. Increasing the diversity and creativity of our cultural production and consumption. To a large extent, I feel like generative AI achieves the opposite. It collapses everything into a narrower, standardized, derivative space. It might even result in cultural stagnation if left unchecked. Not for too long though, since people will simply grow out of it, eventually. Humans have a built-in drive towards interestingness. They get bored quickly.”

He received a number of replies. One was written by an AI. Can you spot it? Read them carefully.

- Depends on the input, bland input=bland output.

- Yes. Several years ago I was impressed… even now I’m impressed, but I’m not interested anymore: meaningless texts, generic art

- We get bored too quickly

- Very well said.

- There’s a parallelism with sports betting: the most probable outcome is the less interesting, thus the smaller prize.

- And/or the AI may get better at producing interesting results

- Generative AI is a pattern extender. That’s it. This is a very good thing though.

- Certainly, generative AI can seem limiting in terms of truncating our creative scope. However, shifting perspective, we may see it as a tool that can enhance our own creativity. Much like a kaleidoscope, it’s our interpretation of AI’s output that truly shines.

- This is 100% about not allowing a better approach and a 1a trojan

- “people will simply grow out of it, eventually.” That sounds like an implausible prediction. (Did people grow out of their propensity to get sucked into addicting tech in other cases?). Is there a concrete outcome in, say, a decade, that would tell us if it was or was not true?

- Companies will pay people to do nothing but create so that they can give data to the ai

#8 isn’t a sore thumb, it’s an amputation. Aside from being generic feelgood blather (not that the human replies are necessarily any more insightful), it simply doesn’t look like a Twitter reply. Nobody says “however, shifting perspective…” on the Bird Site.

(Plus, kaleidoscopes are hollow tubes containing angled mirrors and chips of glass. They don’t “shine”. Mixed-up metaphors are very common in AI writing.)

You can tell AI to imitate a style, but that often leads to an unconvincing “hello, fellow kids!” affect (“Not gunna lie, some of those AI art pieces be lookin fire 🔥 tho lol”), burdened with excessive amounts of “style”. Conversational registers can get incredibly specific, and a failed mimicry attempt can be even more revealing than no attempt at all (I asked Gemini to write replies in the style of a /r/redscarepod poster. It came up with stuff like “The hot take I didn’t know I needed. Generative AI is like the cultural equivalent of the McRib.” Talk like that on /r/RSP and you’d get called a cop.)

Yes, some of those replies are innocuous, and wouldn’t attract notice. But if a bot constantly talked like that to an adversary dedicated to unmasking it, it would be exposed.

It’s easy for AI to fool a careless human. They were doing it in the 1960s. An observant one is a different matter. It’s the brick problem again: AI can get it right some of the time, and maybe even most of the time, but if it slips up even once…

“All of your clues can be defeated with about 5 seconds of prompt engineering. You know that, right?”

What do you want from me? If there was a bulletproof way to spot an AI image, I’d tell you. There simply isn’t one.

All of these hints are vague and “vibes” based. All require a close eye. All produce false positives (many photographers employ a staged, archetypal style. And ChatGPT had to learn its writing style from somewhere…) All can be overcome by prompting, inpainting, and Photoshop.

But there’s good news: most AI scammers are too lazy to do that.

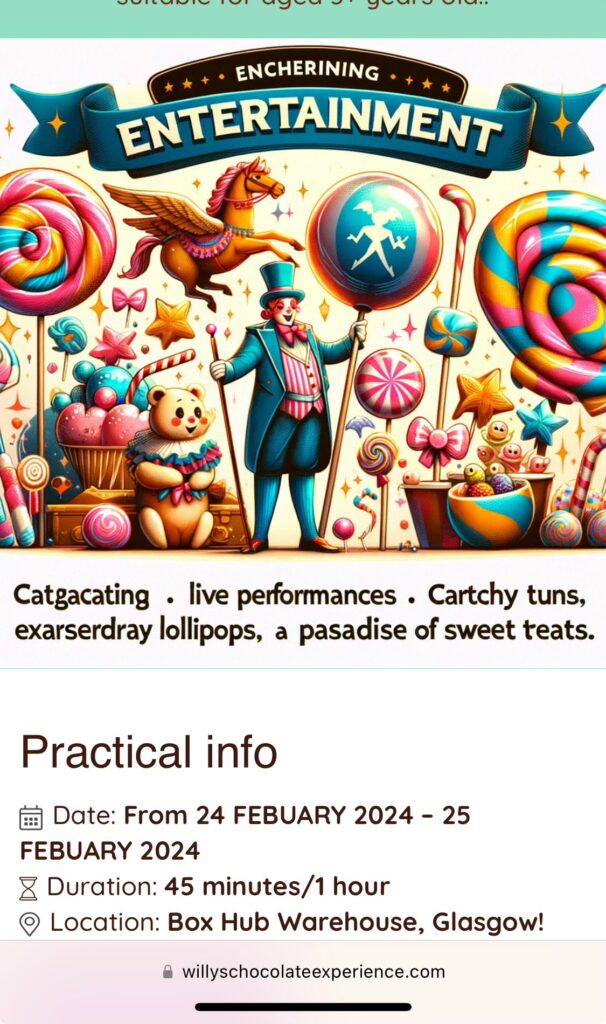

Whenever an AI-related scam hits the public eye, what’s striking is how flagrantly incompetent the scammers are. They refuse to do any work to make their fraud believable. Remember that fake Willy Wonka event in Glasgow? Remember how it had gibberish AI text on the flyers? This is your outreach! The one thing people see! It boggles the mind!

This is a level of laziness that I didn’t know existed. He could have easily fixed the text in Canva, or, hell, MS Paint. Even if he had zero graphic design skills, Fiverr’s right there. It would have cost virtually nothing to have his goddamn ads look professional. Yet for the person running the event, “virtually nothing” was still a price too high.

And what about those repugnant Facebook images from pages called JESUS LUV U and the like?

This is not the work of a Machiavellian criminal genius. This is the work of an impoverished Malaysian contract worker, slaving away in a 45°C cubicle, creating 600 pieces of content per day so he doesn’t get fired or deported. He doesn’t care about quality. Why would he? It just has to be good enough to satisfy his boss (who also doesn’t care about quality, because he, too, is but a cog in a larger system. Hey, you look like you need to read Zvi on Moral Mazes. Understand its lesson, and the world will make about 17% more sense.)

There is no reason to do a good job in that scenario. It’s not as if he’ll win a trophy at the Scammy Awards this year if he makes an image look realistic. (“And the award for best deepfake goes to…Muhammad Awang! Keep it up, and we’ll print an extra visa for your sex-trafficked sister!”). Literally nobody cares.

There’s also the Nigerian Prince effect. Scam emails don’t actually benefit from being clever. They target the mentally ill, or people who don’t have a skeptical bone in their body. If you are capable of asking critical questions, you will never fall for the scam anyway.

Obviously really stupid con artists get caught. But are there smarter ones that aren’t being detected?

I don’t know. My honest impression, based on a glance at the rubbish flowing across my own Facebook feed, is that >90% of these people are lazy, careless bottom-feeders. Click on their pages, and everything is bad. Look at the grandma baking a cake at the start of the article. The person prompting didn’t even take ten seconds to prompt a plausible number onto the birthday cake. Expecting them to fix fine mistakes in Photoshop is like expecting them to sail to the moon in a teacup or empty the ocean with a spoon. They are seemingly all like this.

Tech evangelist types are fond of telling us that AI has democratized software engineering, allowing non-programmers to implement their ideas. Well, that’s also true for frauds: specifically, lazy wannabes who would like to make money unethically but can’t quite figure out how to do so. AI—which can generate limitless text and imagery on command—is their Wonka’s golden ticket to a land of “ENCHERINING ENTERTAINMENT”. But they cannot escape who and what they are—incompetents—and give themselves away at every turn.

Horace once wrote “Caelum non animum mutant qui trans mare currunt”. Translation: if you suck, you suck. That’s my hope: that most criminals are just too lazy or dumb to do much with AI. Capabilities advance, but human ineptitude is forever.

References

[1] Tricky, isn’t it? Here’s my stab at what’s wrong with it. First: it’s convenient that the photo is too blurry for us to see fine details. I don’t say “conclusive”, I say “convenient.” Disguising a fraudulent photo by making it too shitty to see is the oldest trick in the book. The way the second man’s jeans pile around his shoes looks unnatural—his legs seem too short for his torso. He looks like an Oompa Loompa next to the fourth man (in the red shirt), whose legs extend down further, out of frame. The far-right man is sticking a hand into his T-shirt’s hip pocket, which makes no sense, as T-shirts don’t have hip pockets. The third man has a lanyard hanging from his pocket, with a second lanyard sprouting off it. None of this is hard proof. Yet what are the chances of this photo occurring naturally? Where would you find five cartoonishly Midwestern-looking men, with identical jeans and shirts and hats, and with the exact same body type and fat distribution (note the matching manboobs), standing together in a parking lot, being photographed? There is simply no plausible natural context for this picture. Is it a staged photo, then? Are they contestants at a “Dress Like A Teamster” costume party (the winner gets a six-pack of Pabst Blue Ribbon or something)? But then we’d expect to find evidence of this party online (we don’t), such as more photos of these men (there are none), and we’d expect the photo to be high-quality, with all the men in frame, instead of a blurry cropped mess. And if they’re supposed to look the same, the red-hatted man would have taken off his wristwatch to improve the effect (he’s the only one who has one). This is a rare case of Midjourney including some Barthesian “Reality Effect”, with counterproductive results. This image is too arch, too perfect, too inexplicable. It literally doesn’t make sense, except as an AI generated image.

[2] I have long suspected that Dall-E3 images look shiny and fake on purpose. Sadly, there will be a major tragedy or crisis triggered by deepfakes at some point, and OpenAI doesn’t want one of their models caught holding the murder weapon.

[3] Midjourney also has a “--style raw” flag, which supposedly omits the model’s “beautifying” process. It didn’t help much. To Midjourney, ugly person = DnD kobold. Flagging “--no fantasy” gave me a Jabba the Hutt-esque creature.
